Wait, so using db_multirow with -cache_key is not recommended?

Using the -cache_key option with db_* functions is generally problematic because the SQL queries can combine data from multiple tables. Whenever rows in any of these tables are added, updated, or deleted, the cached result becomes stale, and cached and uncached queries return different results. The developer is responsible for flushing the affected cache entries in all these cases. This is hard to get right, because every piece of code that modifies one of the tables must know which cached queries depend on it.
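To illustrate the staleness problem, here is a minimal sketch in plain Tcl. It does not use the db_* API: the arrays users and groups are hypothetical stand-ins for two database tables, and the array cache stands in for the -cache_key cache. The cached result of a query joining both tables does not change when one table is updated, until the entry is flushed explicitly.

```tcl
# Hypothetical stand-ins: two "tables" and a cache (not the db_* API).
array set users  {1 alice 2 bob}
array set groups {1 admins 2 editors}
array set cache  {}

# Emulates a query that joins both tables.
proc query {uid gid} {
    global users groups
    return "$users($uid)/$groups($gid)"
}

# Emulates a db_* call with -cache_key: compute once, then reuse.
proc cached_query {key uid gid} {
    global cache
    if {![info exists cache($key)]} {
        set cache($key) [query $uid $gid]
    }
    return $cache($key)
}

puts [cached_query u1g1 1 1]   ;# alice/admins (computed and cached)
set groups(1) administrators   ;# one of the joined tables changes
puts [cached_query u1g1 1 1]   ;# alice/admins (stale)
puts [query 1 1]               ;# alice/administrators (uncached)
unset cache(u1g1)              ;# explicit flush by the developer
puts [cached_query u1g1 1 1]   ;# alice/administrators
```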

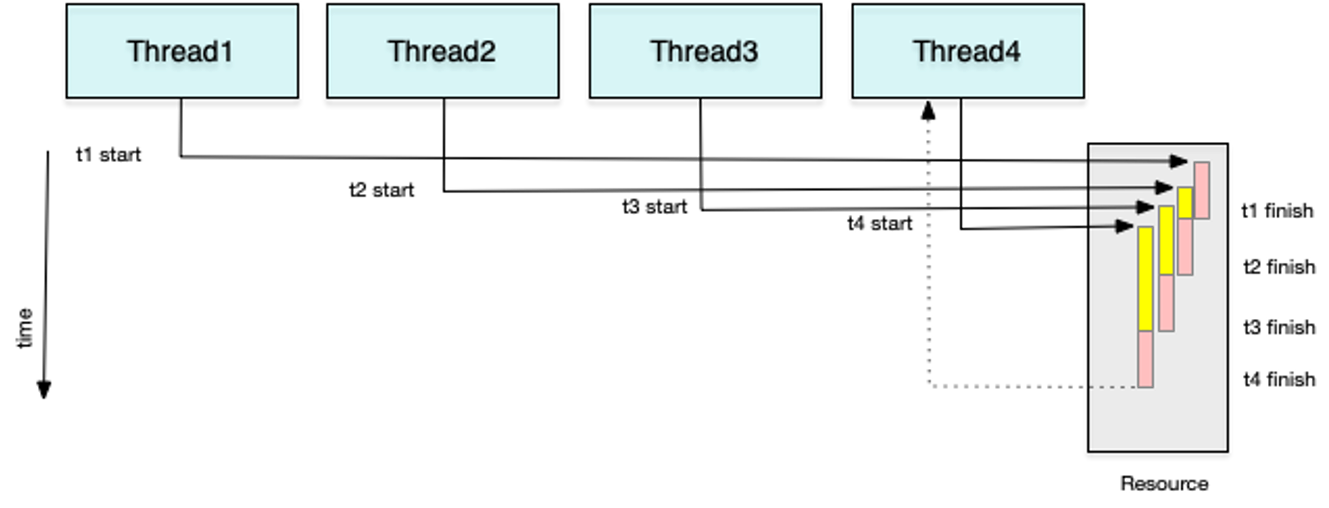

If a single cache is used for many queries, it ends up with a very large number of entries and becomes a bottleneck: requests block while waiting for its lock, which can bring the entire system to a standstill. Wildcard cache flushes are particularly problematic in such scenarios (see above in this thread).
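A rough illustration of why wildcard flushes scale poorly: flushing one exact key is a single hash lookup, while a pattern flush has to compare every key in the cache against the pattern. The sketch uses a plain Tcl dict as a hypothetical stand-in for a shared cache, with made-up helpers flush_key and flush_glob. In a real shared cache, such a scan would typically run while the cache is locked, so the more unrelated entries one cache holds, the longer other requests have to wait.

```tcl
# Hypothetical shared cache holding entries of unrelated queries.
set cache [dict create]
for {set i 0} {$i < 10000} {incr i} {
    dict set cache user-$i "row $i"
    dict set cache group-$i "row $i"
}

# Exact flush: a single hash lookup, independent of the cache size.
proc flush_key {cacheVar key} {
    upvar 1 $cacheVar cache
    dict unset cache $key
}

# Wildcard flush: every key has to be compared against the pattern.
proc flush_glob {cacheVar pattern} {
    upvar 1 $cacheVar cache
    set examined 0
    foreach key [dict keys $cache] {
        incr examined
        if {[string match $pattern $key]} {
            dict unset cache $key
        }
    }
    return $examined
}

flush_key cache user-42
puts [flush_glob cache group-1*]   ;# 19999 keys examined
puts [dict size $cache]            ;# 18888 entries remain
```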

Explicit flushing can be avoided by limiting the lifetime of cache entries (e.g., to 5 minutes) and accepting temporary inconsistency, similar to the "eventually consistent" model of high-availability systems. This approach may suit some applications, but not those where users expect to see their changes immediately.
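The time-limited approach can be sketched as a cache whose entries carry an expiry time. This is plain Tcl with a hypothetical helper (ttlcache::get), not the ns_cache API, which offers expiry natively. The current time is an optional parameter only so that the behavior can be shown deterministically; 300 seconds corresponds to the 5 minutes mentioned above.

```tcl
# Minimal TTL cache sketch (hypothetical names, not the ns_cache API).
namespace eval ttlcache {
    variable entries [dict create]
}

# Return the value for key; evaluate script in the caller's scope when the
# entry is missing or older than ttl seconds. "now" defaults to the clock.
proc ttlcache::get {key ttl script {now ""}} {
    variable entries
    if {$now eq ""} {
        set now [clock seconds]
    }
    if {[dict exists $entries $key]} {
        lassign [dict get $entries $key] expires value
        if {$now < $expires} {
            return $value
        }
    }
    set value [uplevel 1 $script]
    dict set entries $key [list [expr {$now + $ttl}] $value]
    return $value
}

set price 10
puts [ttlcache::get price 300 {set price} 1000]   ;# 10 (cached until 1300)
set price 12                                      ;# the data changes
puts [ttlcache::get price 300 {set price} 1100]   ;# 10 (stale but tolerated)
puts [ttlcache::get price 300 {set price} 1300]   ;# 12 (expired, recomputed)
```

Between the update and the expiry, readers see the old value; that window is exactly the inconsistency this approach accepts in exchange for not flushing.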

For certain applications, using -cache_key might be acceptable, but it is not suitable for building scalable systems. By "scalable," I mean caches with hundreds of thousands of entries, serving hundreds of requests per second, where each request triggers hundreds of lock operations, with peaks of 500,000 locks per second (measured in real OpenACS applications). Most of these locks come from ns_cache or nsv.

In particular, I want to report how old a cached result is. OpenACS doesn’t store that information, so I stored it in an ancillary nsv. But this isn't the best solution...

This is actually problematic, not only performance-wise (since it requires locks for both the cache and the nsv), but also in terms of atomicity: the cache entry and the nsv entry are not updated together, which leads to race conditions. If you want to expire old entries, use the -expires option of the ns_cache commands, either when creating a cache (ns_cache_create) or when adding a single cache entry (ns_cache_eval). If you want to report to the user when an entry was added, store a small dictionary containing the timestamp and the value instead of the bare value. When such a dictionary is kept in an nsv, you can use nsv_dict to retrieve just the value.
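A minimal sketch of storing the timestamp together with the value, using a plain Tcl array as a stand-in for the cache; cache_put, cache_value, and cache_age are hypothetical helpers. Since both fields are written in one operation, a reader cannot observe a value with a mismatched timestamp, and only one structure has to be locked. With ns_cache, the same dictionary would simply be the result of the script that computes the cache entry.

```tcl
# Sketch: timestamp and value in one cache entry (a plain Tcl array stands
# in for the cache; the helper names are hypothetical).
array set cache {}

proc cache_put {key value} {
    global cache
    # One write stores both fields, so they can never get out of sync.
    set cache($key) [dict create time [clock seconds] value $value]
}

proc cache_value {key} {
    global cache
    return [dict get $cache($key) value]
}

proc cache_age {key} {
    global cache
    return [expr {[clock seconds] - [dict get $cache($key) time]}]
}

cache_put report-7 {total 42}
puts [cache_value report-7]             ;# total 42
puts "age: [cache_age report-7] seconds"
```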

The recommended scalable approach is to cache data behind an API instead of caching raw SQL queries: when all updates go through the API, it has full control over them and can flush exactly the affected entries. For managing ns_caches, use ::acs::HashKeyPartitionedCache for non-numeric keys or ::acs::KeyPartitionedCache for numeric keys. The desired number of cache partitions can be specified either at creation time or, e.g., in the configuration file (which allows different sizes for development and production).
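The idea behind key partitioning can be sketched as follows: each key is mapped deterministically to one of N partitions, and in a real system each partition is a separate cache with its own lock. This is not the actual ::acs::*PartitionedCache code; using the key itself for numeric keys and CRC-32 (Tcl 8.6's zlib crc32) for other keys are assumptions chosen for illustration, and numeric_partition and hash_partition are hypothetical helpers.

```tcl
# Sketch of key partitioning (hypothetical helpers, not the actual
# ::acs::*PartitionedCache code).
set partitions 4

# Numeric keys: the key itself selects the partition.
proc numeric_partition {key n} {
    return [expr {$key % $n}]
}

# Non-numeric keys: hash the key first (CRC-32 via Tcl 8.6 zlib here).
# Tcl's % yields a result with the sign of the divisor, so 0 <= p < n.
proc hash_partition {key n} {
    return [expr {[zlib crc32 $key] % $n}]
}

# Record which partition each key lands in.
foreach key {1 2 3 4 5 6 7 8} {
    lappend numeric([numeric_partition $key $partitions]) $key
}
foreach key {foo-1 foo-2 foo-3 bar-1 bar-2} {
    lappend hashed([hash_partition $key $partitions]) $key
}
foreach p [lsort [array names numeric]] {
    puts "numeric partition $p: $numeric($p)"   ;# 0: 4 8, 1: 1 5, ...
}
foreach p [lsort [array names hashed]] {
    puts "hashed partition $p: $hashed($p)"
}
```

Assuming keys are spread evenly and accessed uniformly, each partition's lock sees roughly 1/N of the traffic, which is why partitioning reduces contention compared with one large cache.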

Usage example:

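    # Create the cache; -package_key and -parameter identify where its
    # configuration (e.g., the size) is looked up, -default_size is the
    # fallback when nothing is configured.
    ::acs::HashKeyPartitionedCache create ::acs::misc_cache \
        -package_key acs-tcl \
        -parameter MiscCache \
        -default_size 100KB

    # Return the cached value for the key; on a miss, evaluate the
    # script and cache its result.
    set x [::acs::misc_cache eval -key foo-$id {
        db_string .... {select ... from ... where ... = :id ...}
    }]

    # Remove the entry, e.g., after the underlying data has changed.
    ::acs::misc_cache flush foo-$id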
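In the API-based approach, the flush is issued by the same code that performs the update, so whoever changes the data also knows exactly which key to invalidate. A plain Tcl sketch of this pattern, where the hypothetical namespace foo uses an array db in place of the database table and an array cache in place of ::acs::misc_cache:

```tcl
# Hypothetical API; db and cache are stand-ins, not OpenACS objects.
namespace eval foo {
    variable db
    variable cache
    array set db {1 alice 2 bob}
    array set cache {}
}

# Read access: serve from the cache, fill it on a miss.
proc foo::get_name {id} {
    variable db
    variable cache
    if {![info exists cache($id)]} {
        set cache($id) $db($id)
    }
    return $cache($id)
}

# Write access: update the data, then flush exactly the affected key.
proc foo::set_name {id name} {
    variable db
    variable cache
    set db($id) $name
    unset -nocomplain cache($id)
}

puts [foo::get_name 1]   ;# alice (now cached)
foo::set_name 1 alicia
puts [foo::get_name 1]   ;# alicia: no stale value, no wildcard flush
```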

OpenACS 5.10.1 additionally provides lock-free caches (per_request_cache and per_thread_cache) with very similar interfaces.
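One way a cache can avoid locks altogether is to keep its values in variables of the current Tcl interpreter: an interpreter is only ever used by the thread that created it, so no mutex is needed, at the price that entries are not shared across threads. The sketch below uses hypothetical names (thread_local_cache::get and ::flush) and is not the OpenACS implementation of per_thread_cache.

```tcl
# Sketch of an interpreter-local memoizing cache (hypothetical names).
# Values live in a namespace variable of the current interpreter, which
# only one thread uses, so no locking is required.
namespace eval thread_local_cache {
    variable values
    array set values {}
}

# Return the cached value; on a miss, evaluate script in the caller's scope.
proc thread_local_cache::get {key script} {
    variable values
    if {![info exists values($key)]} {
        set values($key) [uplevel 1 $script]
    }
    return $values($key)
}

proc thread_local_cache::flush {key} {
    variable values
    unset -nocomplain values($key)
}

set computed 0
thread_local_cache::get answer {incr computed; expr {6 * 7}}
thread_local_cache::get answer {incr computed; expr {6 * 7}}
puts "computed $computed time(s)"   ;# computed 1 time(s)
```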

So this NX-based stuff is a backwards compatibility wrapper for old AOLserver ns_cache calls? And OpenACS still uses those old AOLserver-style calls instead of the ns_cache_* API built into NaviServer? Which cache API should I use for future work?

Since OpenACS 5.10.1 requires NaviServer, it is possible to remove the compatibility wrapper and use the underlying NaviServer functions directly in all packages of the oacs-5-10 branch. However, keeping the wrapper still makes sense for legacy or site-specific packages, to ease their transition to OpenACS 5.10.1.